Multidisciplinary research is an approach in which the tools of different sciences and disciplines are combined to study a specific problem. In this article, we examine how this works through the multidisciplinary case of contextual music recommendation. Recommendation systems are among the most useful tools for online browsing. With huge catalogues growing every day, a good recommendation system can save users hours of searching by directly proposing items that are likely to interest them. This holds for almost all online catalogues, including merchandise, movies, books, and music. These recommendation systems are continuously being developed to accommodate different user needs. However, each domain requires a different design depending on the media being recommended.

Music, in particular, is one of the most challenging media to recommend. Music tracks are often short, lasting only a few minutes, yet quite complex in their content. People often spend hours listening to music, one track after another. While users have their own listening preferences, they may also enjoy exploring new styles. Hence, music recommenders are designed to quickly provide coherent items while also mixing in new items for exploration, all while being personalized to each user. A pretty complex task.

To make it even harder, music is one of the media most influenced by the user's situation, especially since the development of portable music players. For example, we listen to different music when we are with friends than when we are alone. We also choose different music when we are sad or happy, or when we are doing sports or studying. The list of influential situations is long, and it keeps growing the more we think about it. Researchers at the crossroads of psychology, information retrieval, and machine learning have been trying to identify these situations and their effects on listeners' preferences for music content in order to provide better recommendations.

Crossroad of domains

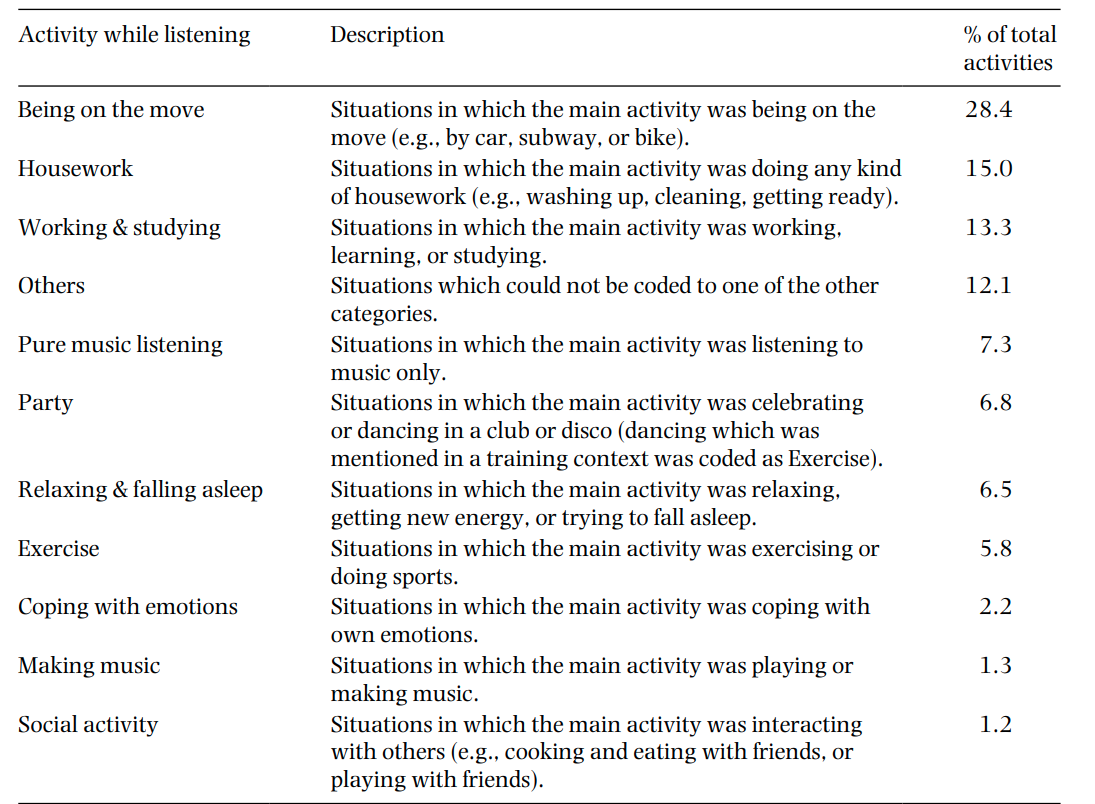

In the psychology domain, researchers focus on understanding how people use music in their daily lives. They try to determine how much of our daily engagement with music is attributable to individual characteristics, how much depends on the listening situation, and which situations matter. Even before information retrieval had matured, they identified the influence of the listening situation on listener preferences and tried to categorize it. For example, situations can be categorized as either environment-related or user-related. Environment-related situations reflect the location, time, weather, and season, among others, while user-related situations include the user's activity, mood, social setting, and so on. These situations are distinct from the listener's personal preferences, which are shaped by personality and culture and are a research question in their own right. Such studies enable us to narrow down the potential situations and study their impact on our preferences. As an example, the following table shows results from one study [1] indicating how often different situations that influence our listening preferences occur.

Examples of music-listening situations and their frequency of occurrence, from the study by Greb et al. [1]

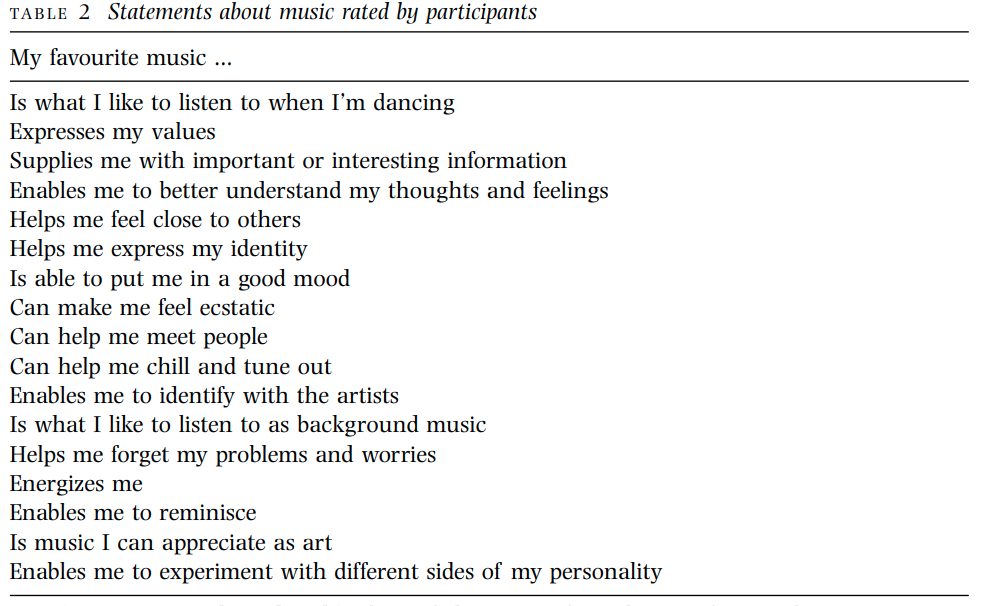

Another dimension of studying how music is used daily is understanding the listening intent. The intent refers to the specific function of music that the listener is seeking. While the listening intent can be influenced by the situation, it can also differ within the same situation. Understanding the listening intent helps us determine the music style and content the listener is looking for. The following table gives an example of different functions of music reported by listeners in one study [2]. While these studies show how complex music selection is, they help narrow the problem down to tangible factors. For those interested in this line of research, here are some references for further reading: [1], [2], [3].

Statements reported by participants about what they seek from their favorite music, from the study by Schäfer et al. [2]

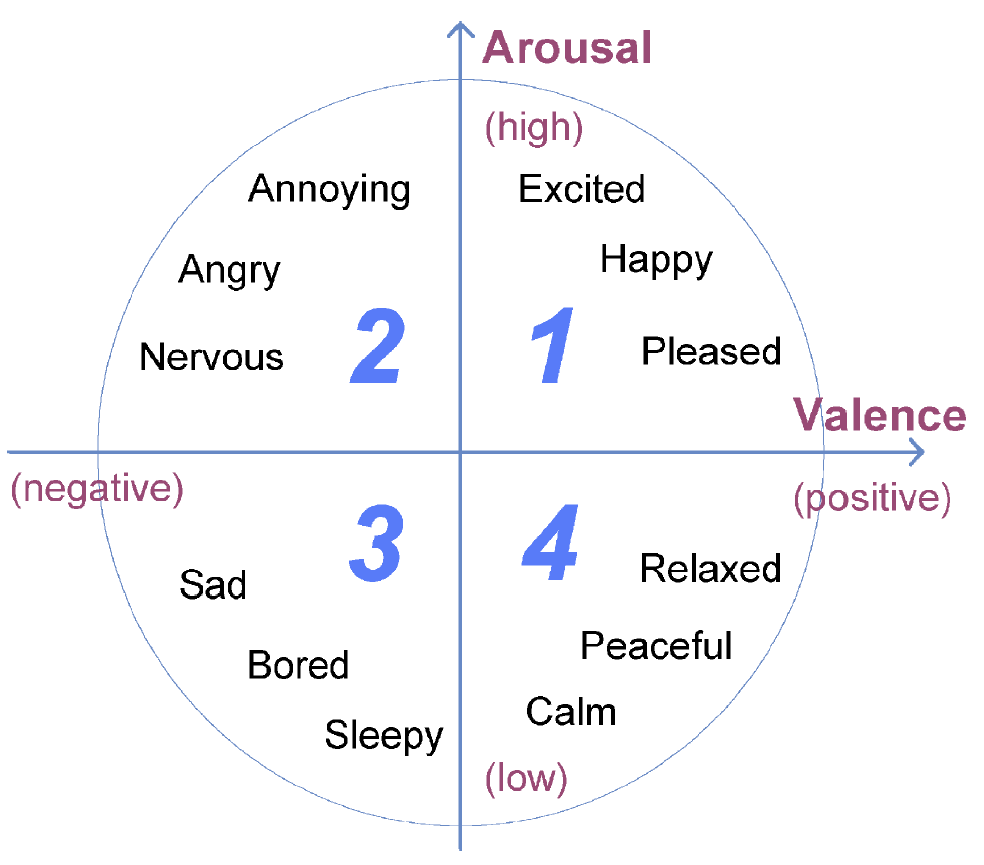

However, to properly study how situations influence the music people choose, progress in information retrieval tools was needed. In the information retrieval domain, researchers have been working on analyzing music content and finding ways to describe and categorize it. One prevalent descriptor of music is the valence-arousal scale. This scale helps narrow down a “music purpose”, i.e. the function of a music piece, by describing its emotional effect along two dimensions: valence, how positive or negative the music feels, and arousal, how energetic or calm it is. Researchers have developed models that rate a music piece on this scale by analyzing its audio content. The figure below shows the valence-arousal space. However, we must remember that this is among the simplest ways of describing music, using only two descriptors.

The valence-arousal scale. Figure from Du et al. [4]

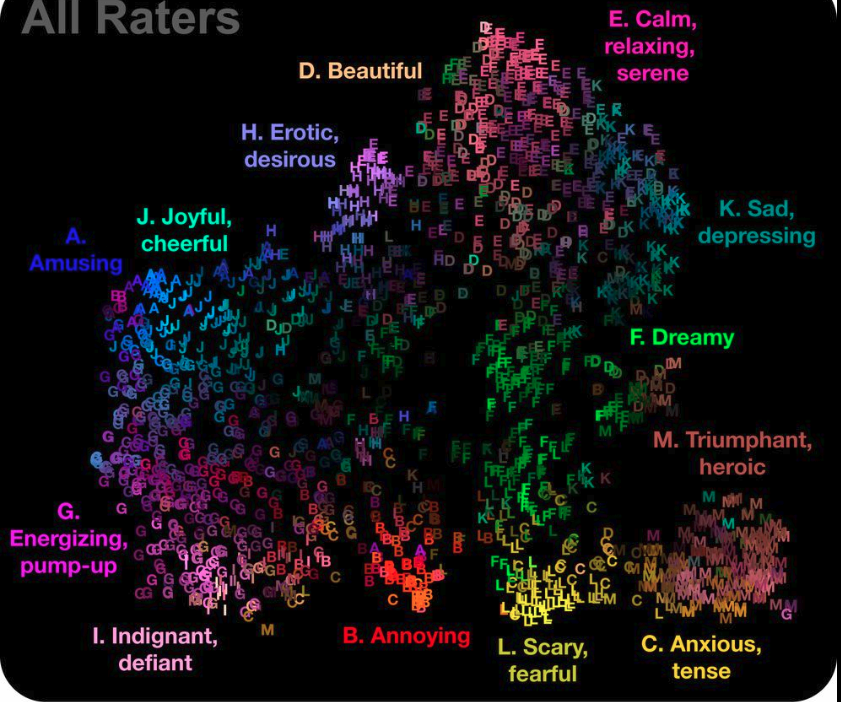
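To make the two dimensions concrete, here is a minimal Python sketch. It assumes each track has already been rated by a model like those described above, with valence and arousal scaled to [-1, 1]; the `Track` class, the quadrant labels, and the example ratings are hypothetical and chosen only for illustration. It shows two simple uses of the scale: a coarse emotion label from the quadrant a track falls in, and ranking tracks by their distance to a target mood.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    title: str
    valence: float  # assumed scale [-1, 1]: unpleasant (-1) to pleasant (+1)
    arousal: float  # assumed scale [-1, 1]: calm (-1) to energetic (+1)


def quadrant(track: Track) -> str:
    """Coarse, illustrative emotion label from the signs of the two coordinates."""
    if track.valence >= 0:
        return "happy/excited" if track.arousal >= 0 else "calm/relaxed"
    return "angry/tense" if track.arousal >= 0 else "sad/depressed"


def closest_to(valence: float, arousal: float, tracks: list[Track]) -> list[Track]:
    """Rank tracks by Euclidean distance to a target point in the valence-arousal plane."""
    return sorted(
        tracks,
        key=lambda t: math.hypot(t.valence - valence, t.arousal - arousal),
    )


# Hypothetical ratings, as a valence-arousal model might output them.
tracks = [
    Track("Track A", 0.8, 0.7),
    Track("Track B", 0.6, -0.6),
    Track("Track C", -0.7, -0.5),
]
print([quadrant(t) for t in tracks])  # ['happy/excited', 'calm/relaxed', 'sad/depressed']

# A pleasant but calm target, e.g. music for studying.
print([t.title for t in closest_to(0.5, -0.5, tracks)])  # ['Track B', 'Track C', 'Track A']
```

The quadrant labels follow the common reading of the valence-arousal plane; ranking by distance to a target point avoids relying on hard quadrant boundaries.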

More complex methods embed music into a high-dimensional space, based either on content similarity or on frequent co-occurrences. This representation enables us to describe and explore music using more abstract features. While less interpretable, these embeddings have proven useful for describing music content according to certain criteria. The figure below shows an example of an embedding space projected down to 2-D, in which similar music clusters together. Another approach we recently proposed is to learn this representation of music for each user independently, by learning how different listeners “use” the music [5]. Now that we have a proper way to represent and retrieve music through its content, and an idea of how different factors influence listening preferences, we can put our findings to use to provide contextual recommendations!

Visualization of a music representation space and the feelings reported by listeners, by Cowen et al. [6]
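The core operation on such an embedding space is a similarity search: tracks whose vectors point in similar directions are treated as similar music. The following minimal sketch uses cosine similarity over a handful of hypothetical 4-dimensional vectors invented for illustration. Real embeddings are learned and usually have far more dimensions, and large catalogues typically rely on approximate nearest-neighbour indexes rather than the exhaustive comparison shown here.

```python
import math

# Hypothetical 4-dimensional embeddings invented for illustration; learned
# embeddings usually have many more dimensions.
EMBEDDINGS: dict[str, list[float]] = {
    "rock_1": [0.9, 0.1, 0.0, 0.3],
    "rock_2": [0.8, 0.2, 0.1, 0.4],
    "ambient_1": [0.0, 0.9, 0.8, 0.1],
    "ambient_2": [0.1, 0.8, 0.9, 0.0],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query: str, k: int = 1) -> list[str]:
    """Return the k tracks closest to `query`, excluding the query itself."""
    scores = {
        track: cosine_similarity(EMBEDDINGS[query], vec)
        for track, vec in EMBEDDINGS.items()
        if track != query
    }
    return sorted(scores, key=lambda t: scores[t], reverse=True)[:k]


print(most_similar("rock_1"))  # ['rock_2']
print(most_similar("ambient_1"))  # ['ambient_2']
```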

Finally, in the machine learning domain, researchers have been trying to develop intelligent systems that take all these factors into consideration and provide relevant and timely recommendations. With such complex requirements, studies have continuously proposed more sophisticated recommendation systems. Since we do not have space to describe all of the methods, here are some examples of specialized contextual recommenders and generic ones. Among the specialized recommenders, one approach [7] uses emotion descriptors to match music to places of interest, which could be useful for a mobile travel guide, for example.
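One simple way to picture emotion-based matching is to describe both places and tracks with the same vocabulary of emotion tags, then pick the track whose tags overlap most with those of the place. The sketch below uses Jaccard similarity (shared tags divided by all distinct tags) for this. It is an illustrative stand-in, not the procedure published in [7], and the places, tracks, and tags are all hypothetical.

```python
# Hypothetical emotion tags invented for illustration; not the vocabulary of [7].
PLACES: dict[str, set[str]] = {
    "gothic_cathedral": {"solemn", "powerful", "transcendent"},
    "city_park": {"calm", "joyful", "light"},
}
TRACKS: dict[str, set[str]] = {
    "organ_piece": {"solemn", "powerful", "tense"},
    "acoustic_folk": {"calm", "light", "tender"},
    "pop_anthem": {"joyful", "powerful", "energetic"},
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Shared tags divided by all distinct tags; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def best_track_for(place: str) -> str:
    """Return the track whose emotion tags overlap most with the place's tags."""
    place_tags = PLACES[place]
    return max(TRACKS, key=lambda track: jaccard(place_tags, TRACKS[track]))


print(best_track_for("gothic_cathedral"))  # organ_piece
print(best_track_for("city_park"))  # acoustic_folk
```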

A more complex and generic contextual system uses all the data available in an online streaming service to predict the content of a new listening session [8]. This system considers both the user's current situation, captured through the device in use and the time of day, and the user's listening history and global preferences, to retrieve the tracks most suitable for the new session. These tracks are represented in an embedding space similar to the one described earlier. Such models are trained on millions of data samples from many different users to better learn how to model a contextual session for any user. Readers interested in contextual recommendation systems can refer to this detailed review [9].
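As a rough illustration of how the current situation and long-term taste can be combined, the sketch below blends a user's long-term embedding with an embedding for the current (device, time of day) context, then ranks tracks by their dot product with the result. This is a deliberately simplified linear blend, not the model of [8], which learns contextual and sequential user embeddings from listening data. All vectors, the context table, and the weight `alpha` are hypothetical.

```python
# All vectors below are hypothetical 3-d embeddings invented for illustration.
TRACKS: dict[str, list[float]] = {
    "upbeat_pop": [0.9, 0.1, 0.2],
    "lofi_beats": [0.1, 0.9, 0.3],
    "workout_edm": [0.8, 0.0, 0.9],
}
# Hypothetical context embeddings keyed by (device, time of day).
CONTEXTS: dict[tuple[str, str], list[float]] = {
    ("mobile", "morning"): [0.6, 0.0, 0.8],
    ("desktop", "evening"): [0.0, 0.9, 0.1],
}


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def session_query(user_vec: list[float], device: str, time_of_day: str,
                  alpha: float = 0.5) -> list[float]:
    """Blend long-term taste with the current context; alpha weights the context."""
    ctx = CONTEXTS[(device, time_of_day)]
    return [(1 - alpha) * u + alpha * c for u, c in zip(user_vec, ctx)]


def recommend(user_vec: list[float], device: str, time_of_day: str,
              k: int = 1, alpha: float = 0.5) -> list[str]:
    """Rank tracks by dot product with the blended session query."""
    query = session_query(user_vec, device, time_of_day, alpha)
    return sorted(TRACKS, key=lambda t: dot(query, TRACKS[t]), reverse=True)[:k]


user_long_term = [0.5, 0.5, 0.3]  # hypothetical long-term taste of one listener
print(recommend(user_long_term, "mobile", "morning"))  # ['workout_edm']
print(recommend(user_long_term, "desktop", "evening"))  # ['lofi_beats']
```

The same listener gets a different top track depending on the context. Raising `alpha` lets the context dominate, while `alpha=0` falls back to long-term preferences alone.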

Conclusion

In this article, we explored how a single, very common problem requires the combined efforts of several domains to tackle it. This is multidisciplinary research.

References

1. F. Greb, W. Schlotz, and J. Steffens, “Personal and situational influences on the functions of music listening,” in Psychology of Music, 46.6 (2018): 763-794.
2. A. North and D. Hargreaves, “Situational influences on reported musical preference,” in Psychomusicology: A Journal of Research in Music Cognition, 15.1-2 (1996): 30.
3. T. Schäfer and P. Sedlmeier, “From the functions of music to music preference,” in Psychology of Music, 37.3 (2009): 279-300.
4. P. Du, X. Li, and Y. Gao, “Dynamic music emotion recognition based on CNN-BiLSTM,” in IEEE 5th Information Technology and Mechatronics Engineering Conference (ITOEC), IEEE (2020).
5. K. Ibrahim et al., “Should we consider the users in contextual music auto-tagging models?” in International Society for Music Information Retrieval Conference (ISMIR) (2020).
6. A. Cowen et al., “What music makes us feel: At least 13 dimensions organize subjective experiences associated with music across different cultures,” in Proceedings of the National Academy of Sciences, 117.4 (2020): 1924-1934.
7. M. Kaminskas and F. Ricci, “Emotion-based matching of music to places,” in Emotions and Personality in Personalized Services, Springer, Cham (2016): 287-310.
8. C. Hansen et al., “Contextual and sequential user embeddings for large-scale music recommendation,” in 14th ACM Conference on Recommender Systems (RecSys) (2020).
9. A. Murciego et al., “Context-Aware Recommender Systems in the Music Domain: A Systematic Literature Review,” in Electronics, 10.13 (2021): 1555.